Tasks with dependencies on this legacy replication service couldn’t use Task Sensors to check if their data is ready. While external services can GET Task Instances from Airflow, they unfortunately can’t POST them.

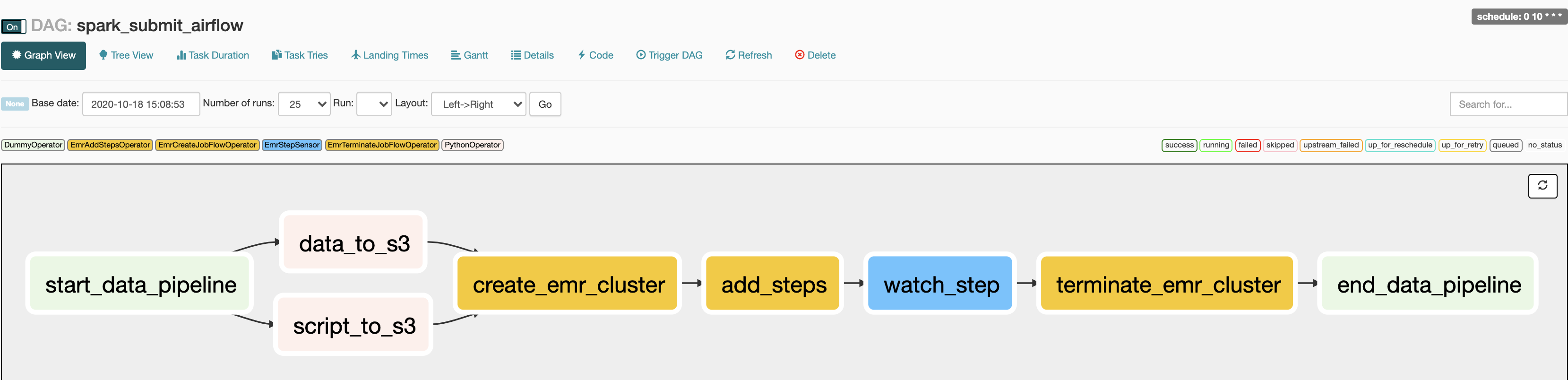

However, what if the upstream dependency is outside of Airflow? For example, perhaps your company has a legacy service for replicating tables from microservices into a central analytics database, and you don’t plan on migrating it to Airflow. You could use this to ensure your Dashboards and Reports wait to run until the tables they query are ready. Even better, the Task Dependency Graph can be extended to downstream dependencies outside of Airflow! Airflow provides an experimental REST API, which other applications can use to check the status of tasks. The External Task Sensor is an obvious win from a data integrity perspective. Sql="SELECT * FROM table WHERE created_at_month = '`", The names of the connections that you pass into these parameters should be entered into your airflow connections screen and the operator should then connect to the right source and target. # Run SQL in BigQuery and export results to a tableįrom _operator import BigQueryOperatorĭestination_dataset_table='', This operator takes two parameters: googlecloudstorageconnid and destawsconnid.
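As a rough sketch of how an application outside Airflow might check a task's status through the experimental REST API, the snippet below polls a task instance until it succeeds, fails, or times out. It assumes the task-instance endpoint path used by the experimental API in Airflow 1.10 and a JSON response containing a `state` field. The helper names (`task_instance_url`, `fetch_state`, `wait_for_task`), the host, and the DAG and task IDs are hypothetical and only for illustration.

```python
import json
import time
from urllib.parse import quote
from urllib.request import urlopen


def task_instance_url(base_url, dag_id, execution_date, task_id):
    """Build the task-instance URL (path assumed from the Airflow 1.10 experimental API)."""
    return "{}/api/experimental/dags/{}/dag_runs/{}/tasks/{}".format(
        base_url.rstrip("/"),
        quote(dag_id, safe=""),
        quote(execution_date, safe=""),
        quote(task_id, safe=""),
    )


def fetch_state(url):
    """GET the task instance and return its 'state' field (None if absent)."""
    with urlopen(url) as response:
        return json.load(response).get("state")


def wait_for_task(url, fetch=fetch_state, poke_interval=60, timeout=3600,
                  sleep=time.sleep):
    """Poll until the task succeeds (True), or fails or times out (False)."""
    waited = 0
    while True:
        state = fetch(url)
        if state == "success":
            return True
        if state in ("failed", "upstream_failed"):
            return False
        if waited >= timeout:
            return False
        sleep(poke_interval)
        waited += poke_interval


if __name__ == "__main__":
    # Hypothetical host, DAG, and task names.
    url = task_instance_url(
        "http://airflow.example.com",
        "replicate_tables",
        "2019-01-01T00:00:00",
        "load_orders",
    )
    print(url)
```

The `fetch` and `sleep` arguments are injectable so the polling loop can be exercised without a running Airflow instance. This only covers the read direction: it does not help Airflow wait on the external service, since external services cannot POST Task Instances.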
